Case 1: Scraping Sina Weather

Page URL: http://weather.sina.com.cn

Create the project

scrapy startproject weather

Put the following code in weather/items.py:

import scrapy


class WeatherItem(scrapy.Item):
    city = scrapy.Field()
    date = scrapy.Field()
    dayDesc = scrapy.Field()
    dayTemp = scrapy.Field()

In the weather/spiders directory, create spider.py with the following code:

import scrapy

from weather.items import WeatherItem


class WeatherSpider(scrapy.Spider):
    name = "myweather"
    allowed_domains = ["sina.com.cn"]
    start_urls = ['http://weather.sina.com.cn']

    def parse(self, response):
        item = WeatherItem()
        # extract() returns a list of strings, one entry per matched node
        item['city'] = response.xpath('//*[@id="slider_ct_name"]/text()').extract()
        # Narrow the remaining lookups to the forecast block
        tenDay = response.xpath('//*[@id="blk_fc_c0_scroll"]')
        item['date'] = tenDay.css('p.wt_fc_c0_i_date::text').extract()
        item['dayDesc'] = tenDay.css('img.icons0_wt::attr(title)').extract()
        item['dayTemp'] = tenDay.css('p.wt_fc_c0_i_temp::text').extract()
        return item

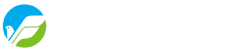

The project structure is shown in the figure (scrapy1.jpg).

Run the spider

Command 1 (print the scraped item in the log): scrapy crawl myweather

Command 2 (also save the item to a JSON file): scrapy crawl myweather -o myweather.json
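Because parse returns a single item whose fields are lists, the exported myweather.json holds one object with parallel lists rather than one record per day. A minimal sketch of regrouping that output follows; the sample values and the helper name to_daily_records are made up for illustration, and it assumes the three forecast lists line up one-to-one, which the real page may not guarantee.

```python
import json

# Hypothetical sample shaped like myweather.json: one item whose fields are
# parallel lists. All values are invented for illustration.
sample = '''[{"city": ["Beijing"],
  "date": ["01-01", "01-02"],
  "dayDesc": ["Sunny", "Cloudy"],
  "dayTemp": ["5 / -3", "4 / -2"]}]'''


def to_daily_records(item):
    """Turn one scraped item (parallel lists) into one dict per day."""
    city = item["city"][0] if item["city"] else None
    return [
        {"city": city, "date": date, "desc": desc, "temp": temp}
        for date, desc, temp in zip(item["date"], item["dayDesc"], item["dayTemp"])
    ]


records = to_daily_records(json.loads(sample)[0])
for record in records:
    print(record)
```

Note that zip stops at the shortest list, so a mismatch in list lengths shows up as missing days rather than as an error.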

Case 2: Scraping Baidu Tieba data

Page URL: https://tieba.baidu.com/f?kw=%E7%BD%91%E7%BB%9C%E7%88%AC%E8%99%AB&ie=utf-8&pn=0
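The kw parameter in this URL is the percent-encoded UTF-8 form of the forum name 网络爬虫 ("web crawler"). As a rough sketch, the same URL can be rebuilt with the standard library; the function name tieba_url and the page_size of 50 are assumptions for illustration, not values taken from this article.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

BASE = "https://tieba.baidu.com/f"


def tieba_url(keyword, page=0, page_size=50):
    """Build a listing URL. page_size=50 is an assumed step for the pn offset."""
    query = urlencode({"kw": keyword, "ie": "utf-8", "pn": page * page_size})
    return f"{BASE}?{query}"


url = tieba_url("网络爬虫")
print(url)

# Decoding the query string recovers the original forum name
decoded_kw = parse_qs(urlsplit(url).query)["kw"][0]
print(decoded_kw)
```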

Create the project

scrapy startproject tieba

Put the following code in tieba/items.py:

import scrapy


class tiebaitem(scrapy.Item):
    title = scrapy.Field()
    author = scrapy.Field()
    reply = scrapy.Field()

In the tieba/spiders directory, create spider.py with the following code:

import scrapy

from tieba.items import tiebaitem


class BbsSpider(scrapy.Spider):
    name = "tieba"
    allowed_domains = ["baidu.com"]
    start_urls = ["https://tieba.baidu.com/f?kw=%E7%BD%91%E7%BB%9C%E7%88%AC%E8%99%AB&ie=utf-8&pn=0"]

    def parse(self, response):
        item = tiebaitem()
        # Each field collects one list covering every thread on the page
        item['title'] = response.xpath('//*[@id="thread_list"]/li/div/div[2]/div[1]/div[1]/a/text()').extract()
        item['author'] = response.xpath('//*[@id="thread_list"]/li/div/div[2]/div[1]/div[2]/span[1]/span[1]/a/text()').extract()
        item['reply'] = response.xpath('//*[@id="thread_list"]/li/div/div[1]/span/text()').extract()  # TODO: the first two reply counts are not output
        yield item
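The spider above collects titles, authors, and reply counts as three page-wide lists, so a thread that lacks one field shifts every later entry in that list. Whether that explains the TODO is not established here. The sketch below shows the alternative of extracting row by row, using the standard library's xml.etree.ElementTree on a made-up, well-formed snippet; the markup and class names are hypothetical and do not match real Tieba pages.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed snippet for illustration; real Tieba markup differs.
SNIPPET = """
<ul id="thread_list">
  <li><span class="reply">12</span><a class="title">First thread</a><a class="author">alice</a></li>
  <li><a class="title">Pinned thread</a></li>
  <li><span class="reply">3</span><a class="title">Third thread</a><a class="author">bob</a></li>
</ul>
"""


def text_or_none(row, path):
    """Return the text of the first match under row, or None if absent."""
    node = row.find(path)
    return node.text if node is not None else None


# Select the rows first, then look up each field relative to its own row
rows = ET.fromstring(SNIPPET.strip()).findall("li")
threads = [
    {
        "title": text_or_none(row, "a[@class='title']"),
        "author": text_or_none(row, "a[@class='author']"),
        "reply": text_or_none(row, "span[@class='reply']"),
    }
    for row in rows
]
for thread in threads:
    print(thread)
```

In a Scrapy spider the same idea would mean selecting the li nodes first and then calling xpath with a relative path (starting with ./) on each row, yielding one item per thread.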

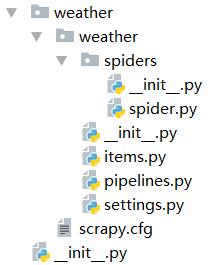

The project structure is shown in the figure (scrapy2.jpg).

Run the spider

Command 1: scrapy crawl tieba

Command 2: scrapy crawl tieba -o tieba.json

Case 3: Scraping the Dangdang product list

Page URL: http://category.dangdang.com/pg1-cid4004279.html

Create the project

scrapy startproject autopjt

Put the following code in autopjt/items.py:

import scrapy


class AutopjtItem(scrapy.Item):
    # name stores the product names
    name = scrapy.Field()
    # price stores the product prices
    price = scrapy.Field()
    # comnum stores the number of reviews per product
    comnum = scrapy.Field()
    # link stores the product links
    link = scrapy.Field()

In the autopjt/spiders directory, create spider.py with the following code:

import scrapy
from scrapy.http import Request

from autopjt.items import AutopjtItem


class AutospdSpider(scrapy.Spider):
    name = "autospd"
    allowed_domains = ["dangdang.com"]
    start_urls = (
        'http://category.dangdang.com/pg1-cid4004279.html',
    )

    def parse(self, response):
        item = AutopjtItem()
        # Extract product names, prices, links, and review counts with XPath
        item["name"] = response.xpath("//a[@class='pic']/@title").extract()
        item["price"] = response.xpath("//span[@class='price_n']/text()").extract()
        item["link"] = response.xpath("//a[@class='pic']/@href").extract()
        item["comnum"] = response.xpath("//a[@name='itemlist-review']/text()").extract()
        # Hand the item for this page to the pipeline
        yield item
        # The key step: loop over pages 2 to 76 so that all 76 pages are crawled
        for i in range(2, 77):
            # Build each page URL from the pattern pg<page number>-cid4004279.html
            url = "http://category.dangdang.com/pg" + str(i) + "-cid4004279.html"
            # Yield a Request with this method as the callback to keep crawling;
            # repeated URLs are dropped by Scrapy's default duplicate filter
            yield Request(url, callback=self.parse)
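The pagination above relies on one URL pattern with the page number as the only variable. As a small illustration, the full set of 76 listing URLs can be generated up front; listing_urls and URL_TEMPLATE are hypothetical names introduced for this sketch.

```python
URL_TEMPLATE = "http://category.dangdang.com/pg{page}-cid4004279.html"


def listing_urls(first_page=1, last_page=76):
    """Return the listing URLs for pages first_page..last_page, inclusive."""
    return [URL_TEMPLATE.format(page=p) for p in range(first_page, last_page + 1)]


urls = listing_urls()
print(len(urls))
print(urls[0])
print(urls[-1])
```

One possible design choice, not taken by the spider above, is to assign such a list to start_urls so that parse no longer needs to re-yield the same 75 requests from every page.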

Put the following code in autopjt/pipelines.py:

import codecs
import json


class AutopjtPipeline(object):
    def __init__(self):
        # Open the output file; each product is written to it as one JSON line
        self.file = codecs.open('D:/DataguruPyhton/PythonSpider/images/当当手机.txt', "wb", encoding="utf-8")

    def process_item(self, item, spider):
        # Each page holds several products, so handle one product per iteration;
        # len(item["name"]) is the number of products on the current page
        for j in range(0, len(item["name"])):
            # Pick out the fields of the j-th product on the current page
            name = item["name"][j]
            price = item["price"][j]
            comnum = item["comnum"][j]
            link = item["link"][j]
            # Recombine the j-th product's name, price, comnum, and link into a dict
            goods = {"name": name, "price": price, "comnum": comnum, "link": link}
            # Serialize the j-th product and write it to the file as one line
            i = json.dumps(dict(goods), ensure_ascii=False)
            line = i + '\n'
            self.file.write(line)
        # Return the item so any later pipeline can still process it
        return item

    def close_spider(self, spider):
        self.file.close()
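The pipeline indexes all four lists by the length of item["name"], so it raises IndexError if another list turns out shorter on some page. The following is a sketch of the same regrouping with zip, which stops at the shortest list instead; write_products is a hypothetical helper, the sample page is invented, and it writes to an in-memory buffer rather than the file used above.

```python
import io
import json


def write_products(item, out):
    """Write one JSON object per product to out; return the number of lines."""
    rows = zip(item["name"], item["price"], item["comnum"], item["link"])
    count = 0
    for name, price, comnum, link in rows:
        goods = {"name": name, "price": price, "comnum": comnum, "link": link}
        out.write(json.dumps(goods, ensure_ascii=False) + "\n")
        count += 1
    return count


# Made-up page item; comnum is one entry short on purpose
page = {
    "name": ["Phone A", "Phone B"],
    "price": ["999", "1999"],
    "comnum": ["12 reviews"],
    "link": ["http://example.com/a", "http://example.com/b"],
}
buffer = io.StringIO()
written = write_products(page, buffer)
print(written)
print(buffer.getvalue())
```

The trade-off is that zip drops unmatched products silently, so comparing the list lengths and logging a mismatch may be preferable when completeness matters.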

Put the following code in autopjt/settings.py:

BOT_NAME = 'autopjt'

SPIDER_MODULES = ['autopjt.spiders']
NEWSPIDER_MODULE = 'autopjt.spiders'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Disable cookies (enabled by default)
COOKIES_ENABLED = False

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'autopjt.pipelines.AutopjtPipeline': 300,
}
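The integer assigned to each entry in ITEM_PIPELINES sets the order in which pipelines run: Scrapy processes items through them in ascending order of that value, which is conventionally chosen in the 0-1000 range. A small sketch of that ordering follows; DedupPipeline is a hypothetical second pipeline added only to make the ordering visible.

```python
# Hypothetical settings fragment: only AutopjtPipeline comes from this article
ITEM_PIPELINES = {
    "autopjt.pipelines.AutopjtPipeline": 300,
    "autopjt.pipelines.DedupPipeline": 100,  # hypothetical, for illustration
}

# Lower values run first, so sort the entries by their integer value
run_order = [path for path, _ in sorted(ITEM_PIPELINES.items(), key=lambda kv: kv[1])]
print(run_order)
```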

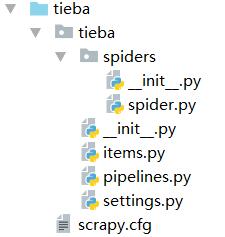

The project structure is shown in the figure (scrapy3.jpg).

Run the spider

Command (the spider is registered under the name autospd, not the project name): scrapy crawl autospd